SESLA Transcriber - User Manual

1 Introduction

Sesla Transcriber is a tool for efficient speech transcription and labeling. Our goal is to make this manual work as time-efficient and budget-friendly as possible. Besides correcting an erroneous speech transcript, the tool supports transcription from scratch and more general forms of labeling (e.g. translation, sentiment annotation, etc.).

The main innovation is a feature for budget-friendly transcription. It uses an automatically created transcript as a starting point and guides the transcriber through the correction of a selection of segments that are likely to contain errors. The general strategy is to focus only on those erroneous parts and trust the speech recognizer for the rest, which reduces the amount of manual effort.

The tool asks the user to specify a time budget (for example, 30 minutes of annotation). It then automatically chooses an appropriate number of segments for correction so that the time budget is kept. The locations and sizes of these segments are chosen to maximize the expected reduction of errors given the time budget, according to the Sesla method (Segmentation for Efficient Supervised Language Annotation) as described in [sperber2014tacl]. [sperber2014tacl] reports savings in human effort of 25% compared to the traditional, cost-insensitive approach of choosing low-confidence segments from a fixed segmentation.

To choose segments for correction, we employ predictive models that estimate the necessary correction time and the number of errors in every potential segment. Errors are predicted using confidence scores from the automatic speech recognizer. The cost model is trained on the fly while the transcriber is working. A new transcriber starts with a transcriber-independent cost model, which over time predicts that particular transcriber more and more accurately. Whenever the cost model is updated, the choice of segments is updated as well, so that it benefits from the improved cost estimates. This approach is described in [sperber2014slt].

The user interface was designed to be easy to learn and efficient to use. It allows either transcribing each segment from scratch or post-editing. It also has logging features that allow detailed user studies.
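The following minimal Python sketch makes the selection idea concrete. It assumes that each candidate segment already comes with its word confidences and a predicted correction time from some cost model. A word with confidence c is wrong with probability 1 - c, so the expected number of errors in a segment is the sum of these values. The sketch then greedily picks the segments with the highest expected errors per second until the budget is used up. This greedy selection over a fixed set of candidates is a simplified stand-in, not the Sesla method of [sperber2014tacl], which also optimizes segment locations and sizes. The names `Segment` and `choose_segments` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    # Hypothetical container: recognizer word confidences (0..1) and a
    # predicted correction time in seconds (assumed > 0) from a cost model.
    word_confidences: list[float]
    predicted_seconds: float

    @property
    def expected_errors(self) -> float:
        # A confidence c estimates P(word is correct), so 1 - c is the
        # probability that the word is wrong; the sum is the expected error count.
        return sum(1.0 - c for c in self.word_confidences)


def choose_segments(segments: list[Segment], budget_seconds: float) -> list[int]:
    """Greedily pick segments by expected errors per second of correction
    time while the total predicted time stays within the budget.
    Returns the chosen indices in their original order."""
    ranked = sorted(
        range(len(segments)),
        key=lambda i: segments[i].expected_errors / segments[i].predicted_seconds,
        reverse=True,
    )
    chosen, spent = [], 0.0
    for i in ranked:
        cost = segments[i].predicted_seconds
        if spent + cost <= budget_seconds:
            chosen.append(i)
            spent += cost
    return sorted(chosen)


if __name__ == "__main__":
    candidates = [
        Segment([0.96, 0.97, 0.75], 6.0),
        Segment([0.99, 1.00], 4.0),
        Segment([0.79, 0.50], 5.0),
    ]
    print(choose_segments(candidates, budget_seconds=11.0))  # [0, 2]
```

In this example, the confident second segment is skipped because its expected errors per second are lowest. The remaining two segments fit the 11-second budget exactly.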

2 Illustration of Transcription Approaches

This section shows a few examples of Sesla Transcriber’s features. A detailed explanation of how to use these features is given in Section 3.

2.1 Budgeted transcription

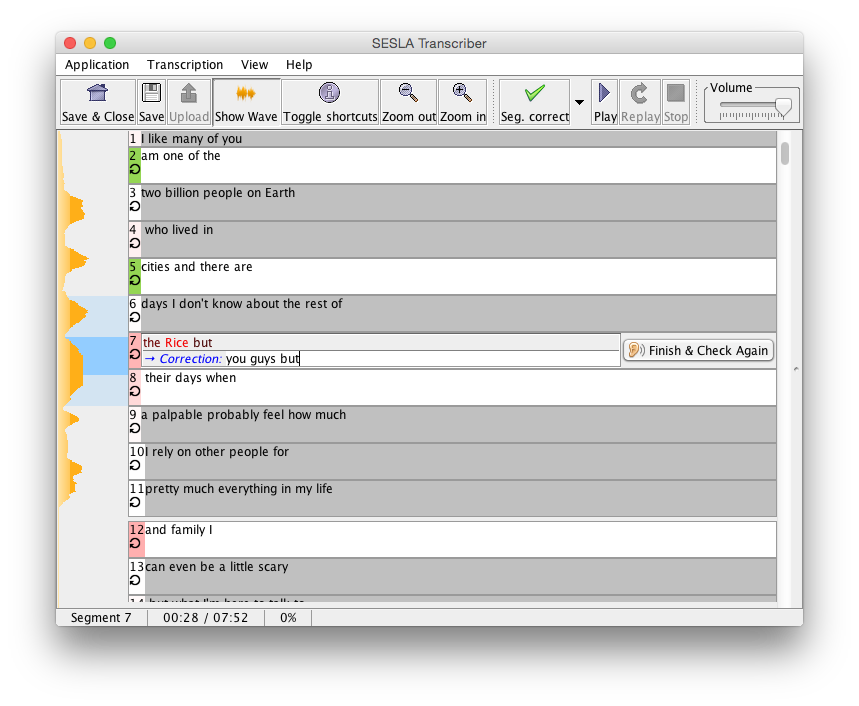

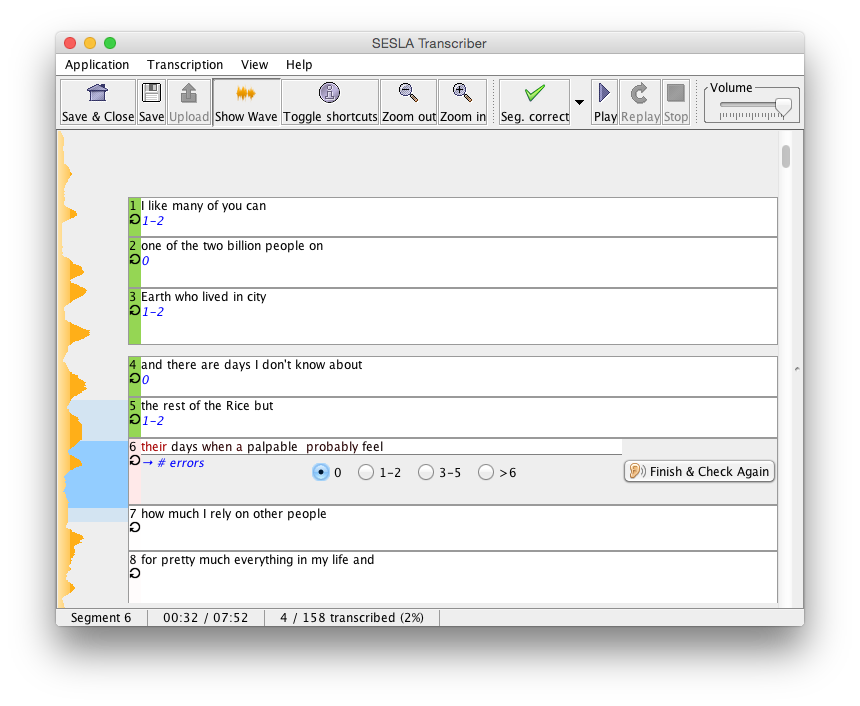

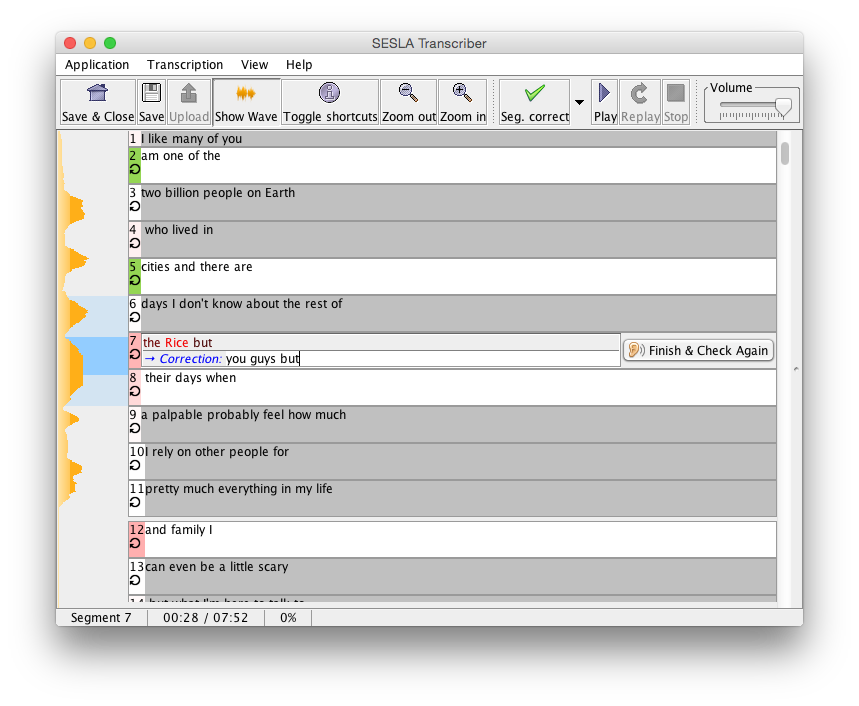

In budgeted transcription mode, the transcriber corrects only a subset of all segments in order to save time. The most worthwhile segments are chosen automatically, based on word confidences, a cost model, and the time budget. The screenshot shows an example, with the segments chosen for transcription displayed in white. Parts of the initial transcript that were not chosen for correction are also displayed, as gray segments, to convey context. These gray segments cannot be edited.

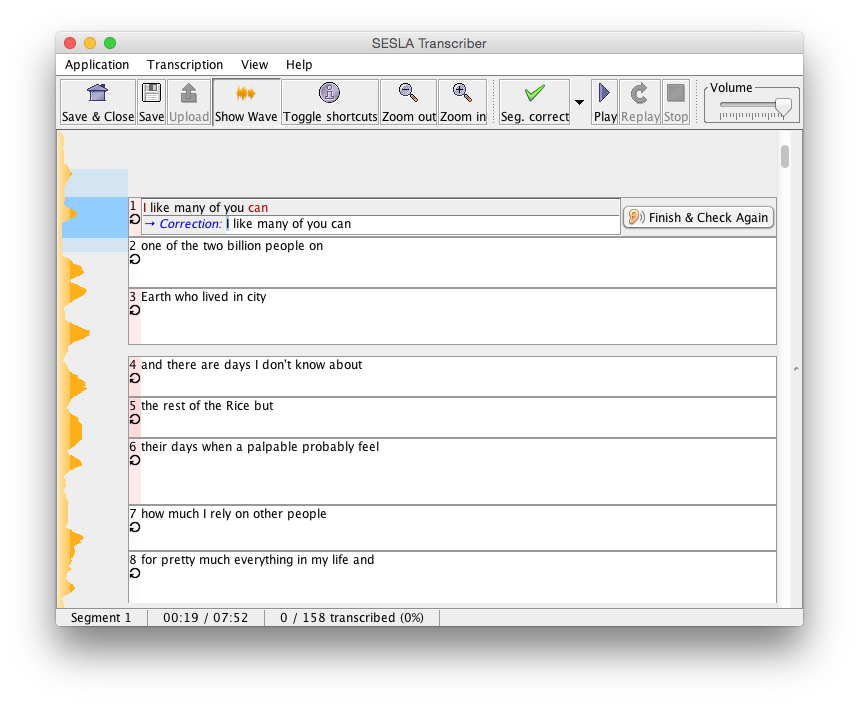

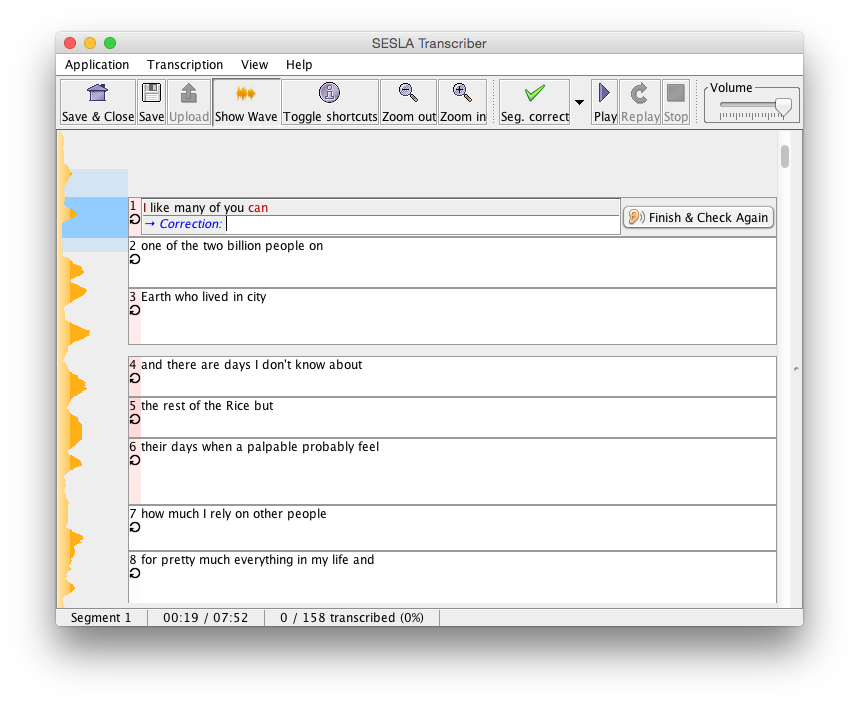

2.2 Editing of transcript: post-editing vs. from scratch approach

Sesla Transcriber features two transcription modes: from-scratch and post-editing. In from-scratch style, text fields are initially empty, and the transcriber must type in the whole transcription. In post-editing style, the segment correction text fields are pre-populated with the initial transcription, and the transcriber edits only the erroneous parts. In our experience, post-editing is a good choice for beginners who may at times feel unsure about their transcriptions. On the other hand, more experienced transcribers and fast typists may find from-scratch transcription more efficient. Another factor to consider is the quality of the initial transcripts: studies have reported that above a word error rate of 10%-20%, transcribers often prefer from-scratch transcription over post-editing.
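The choice between the two modes depends partly on the word error rate (WER) of the initial transcript. For that reason, it can help to estimate the WER on a short sample that you transcribe by hand. The Python sketch below computes WER in the standard way: the word-level edit distance (substitutions, deletions, and insertions) divided by the number of reference words. The function name is hypothetical, and the function is not part of Sesla Transcriber.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference
    words, using a word-level Levenshtein distance."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of a hypothesis word
                prev[j - 1] + (r != h),  # substitution (or match at cost 0)
            )
        prev = curr
    return prev[-1] / max(len(ref), 1)


if __name__ == "__main__":
    # One deletion ("to") and one substitution ("source" -> "sauce"): 2 / 5 words
    print(word_error_rate("to go to the source", "to go the sauce"))  # 0.4
```

A result well above the 10%-20% range mentioned above suggests that from-scratch transcription may be the better choice.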

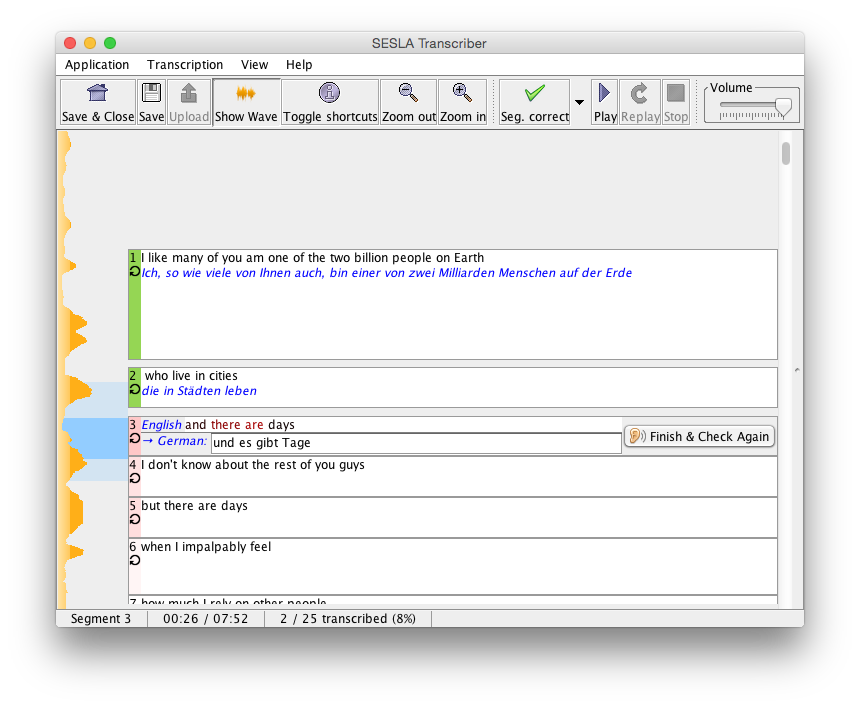

2.3 Labeling

Besides transcription, the tool also allows labeling of segments. Here, we understand labeling as annotating transcribed segments with additional (meta-)information, instead of editing the transcription itself. Example use cases are speech translation, sentiment annotation, or quality estimation by a human.

2.3.1 Speech translation example

2.3.2 Quality estimation example

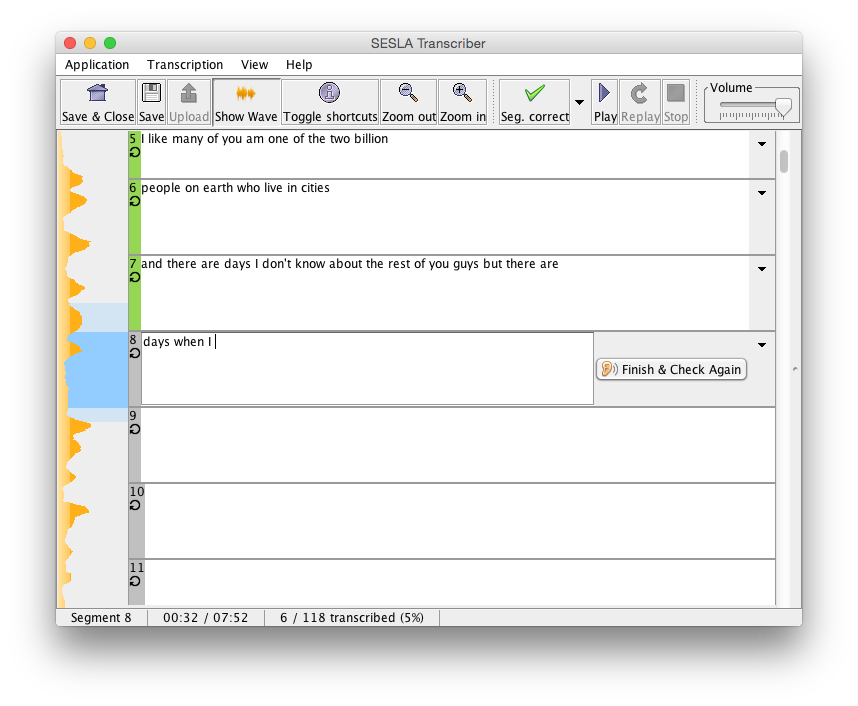

2.4 Transcription without initial transcript

Transcriptions can also be created when no initial transcript is available. In this case, the audio is chunked into 4-second segments, which are then transcribed one at a time. This blind segmentation may cut words in half. This is not a problem, however, because a small amount of audio context from before and after each segment is played back (at reduced volume).
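The following Python sketch illustrates blind segmentation. It splits a recording of known length into 4-second chunks and pads each chunk's playback window with context from both neighbors. The context length of 0.5 seconds is an assumed value, since the manual only says "a small amount". The function name is hypothetical, and the sketch does not model the reduced playback volume of the context.

```python
def blind_segments(total_seconds: float, chunk: float = 4.0, context: float = 0.5):
    """Split audio of the given length into fixed-size chunks.

    Returns (start, end, play_start, play_end) tuples in seconds, where the
    playback window extends `context` seconds beyond each chunk boundary,
    clipped to the beginning and end of the recording.
    """
    segments = []
    start = 0.0
    while start < total_seconds:
        end = min(start + chunk, total_seconds)
        play_start = max(0.0, start - context)
        play_end = min(total_seconds, end + context)
        segments.append((start, end, play_start, play_end))
        start = end
    return segments


if __name__ == "__main__":
    for seg in blind_segments(10.0):
        print(seg)
```

A word cut at the 4-second boundary is still audible in both the first and the second playback window.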

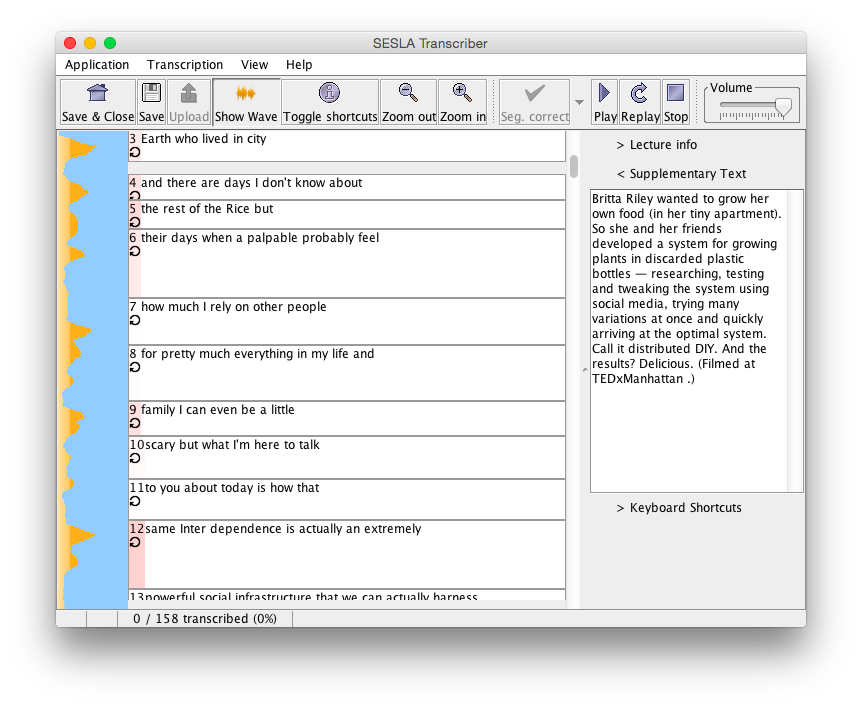

2.5 Supplementary text

In many practical situations, there is supplementary text material available that can help the transcriber a great deal, such as lecture notes, summaries, abstracts, or even just a short speaker biography. Such supplementary material can contain named entities and other difficult concepts, and can be included in the transcription screen for fast reference.

3 Getting Started

3.1 Download and System Requirements

Sesla Transcriber is available for download from here. It requires Java 7 and has been tested on Windows and Mac OS X. Because of bugs in the Java Sound API under Linux, Linux is currently not supported.

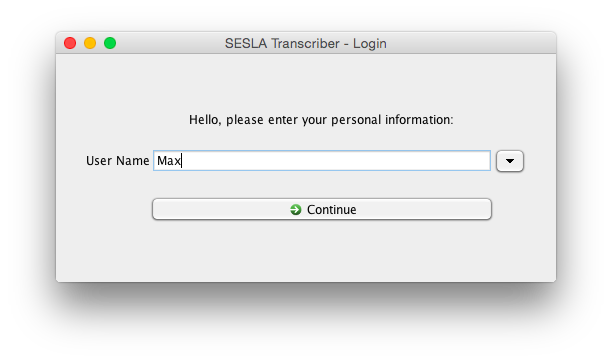

3.2 User profiles

The first screen that opens after launching the tool asks you to specify a user profile. You must provide a unique user name, which identifies the user profile. Each user profile is associated with a separate cost model, so different users should not share a profile.

3.3 New projects

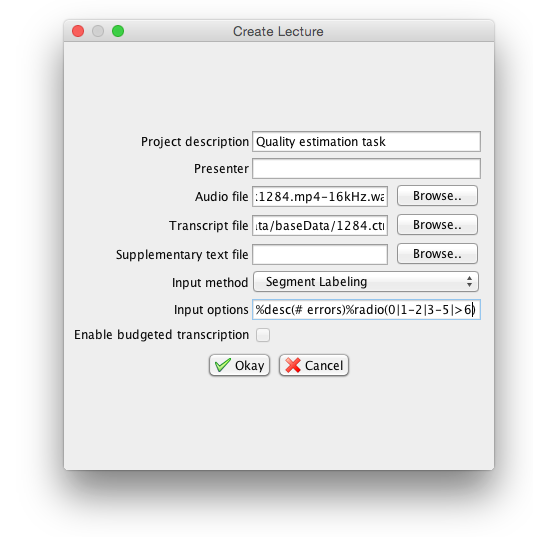

The next screen shows a list of transcription projects. To create a new project, click the button labeled “New transcription.” You will be asked to specify the following:

- Project description

- Presenter (optional)

- Audio file (a single file in .wav or .ogg format)

- Transcript file: a .ctm file that can contain transcript and/or segmentation information, depending on the desired mode of use (see Section 5 for details of the file format). The .srt subtitle file format can also be used. In that case, budgeted transcription and automatic segmentation are not supported.

  - To use budgeted transcription, the .ctm file must provide confidence-annotated words. Confidence scores lie between 0 and 1 and estimate the probability that the word is correct.

  - For post-editing-style correction (see below), initial words must be provided. The segmentation used for correction can either be specified in the file or created automatically (a prompt will appear later if no segments are provided).

  - For from-scratch-style transcription, words of an automatic transcript are not strictly required. If they are omitted, however, at least a segmentation of the audio must be specified.

- Supplementary text file: a plain text file that can contain any kind of information that might help with the correction, e.g. a list of named entities, a lecture abstract, etc. It will be displayed later in the description screen.

- Input method: Select between from-scratch (text boxes initially empty), post-editing (text boxes prepopulated with initial transcription), and labeling.

- Input options: Specify short descriptions to display in front of the correction text box and in front of the initial hypothesis. For labeling mode, you can also specify the input type (text, numbers, categories, etc.).

- Enable budgeted transcription: If checked, you will be prompted for the available time budget before the actual correction starts. If unchecked, all segments will be activated for transcription. Not available for the labeling input method.

3.3.1 Input options syntax

Input options allow specifying descriptive labels that are displayed before the hypothesis and the correction. For labeling mode, the input type can also be specified. Concatenate the following %-directives:

- %desc(x) -> display ‘x’ before the correction edit field.

- %hypodesc(x) -> display ‘x’ before the hypothesis text.

Labeling input method only:

- %text(x) -> Set the input type to arbitrary text, and prepopulate the edit field with the string ‘x’.

- %int() -> Constrain input to integers.

- %int(1) -> Constrain input to integers, with default value 1.

- %double(0.1) -> Constrain input to floating-point numbers and (optionally) set a default value.

- %radio(a|b|@c) -> Constrain input to exclusive categories a, b, c, and pre-select c (if no @ is specified, the first item is pre-selected).

- %check(a|@b|@c) -> Constrain input to non-exclusive categories a, b, c, and pre-select b and c (0 or more @s can be specified).

Transcription examples:

- %desc(Corrected:)

- %desc(Corrected:)%hypodesc(ASR:)%text()

Labeling examples:

- %desc(French:)%hypodesc(German:)%text()

- %desc(# errors:)%hypodesc(Transcription:)%int(0)

- %desc(# errors:)%radio(0|1-2|3-5|>6)
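The following Python sketch shows one way to read the %-directive syntax described above and turn an options string into a settings dictionary. It is a hypothetical reimplementation for illustration, not Sesla Transcriber's own parser. It assumes that directive arguments never contain a closing parenthesis, and it applies the @ pre-selection rules as documented for %radio and %check.

```python
import re

# One directive: %name(argument), e.g. %desc(# errors:) or %radio(a|b|@c)
DIRECTIVE = re.compile(r"%(\w+)\(([^)]*)\)")


def parse_input_options(options: str) -> dict:
    """Parse a concatenation of %-directives into a settings dictionary."""
    settings = {"desc": "", "hypodesc": "", "type": None, "default": None, "choices": []}
    for name, arg in DIRECTIVE.findall(options):
        if name in ("desc", "hypodesc"):
            settings[name] = arg
        elif name == "text":
            settings["type"], settings["default"] = "text", arg
        elif name in ("int", "double"):
            cast = int if name == "int" else float
            settings["type"] = name
            settings["default"] = cast(arg) if arg else None  # default is optional
        elif name in ("radio", "check"):
            items = arg.split("|")
            labels = [item.lstrip("@") for item in items]
            selected = [lab for item, lab in zip(items, labels) if item.startswith("@")]
            if name == "radio" and not selected:
                selected = labels[:1]  # radio buttons pre-select the first item
            settings["type"], settings["choices"], settings["default"] = name, labels, selected
        else:
            raise ValueError(f"unknown directive %{name}")
    return settings


if __name__ == "__main__":
    print(parse_input_options("%desc(# errors:)%radio(0|1-2|3-5|>6)"))
```

For the last labeling example above, this yields the description "# errors:", the four radio choices, and "0" as the pre-selected item.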

3.4 New projects without initial transcripts

If no initial transcript from a speech recognizer is available, use the button “Transcribe w/o initial transcript” to create a new project. The audio will be split into 4-second segments, which are transcribed one by one. Naturally, transcription will be from scratch and without a time budget.

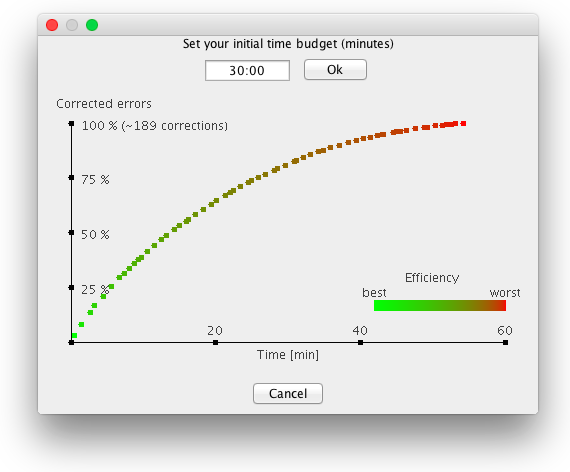

3.5 Choosing a time budget

If budgeted transcription is activated (not available for labeling), this screen is shown before the actual transcription. The user is asked to specify how much time to spend on this particular transcription. A few seconds after the dialog is displayed, it also shows the estimated trade-off curve: how much time is needed to correct a given proportion of the errors in the initial transcript. For first-time users, the estimate will be somewhat inaccurate, because it relies on a transcriber-independent cost model. For users who have performed a few transcriptions, the cost model will be more accurate. The specified time budget determines the number and length of the segments that the user will be asked to transcribe or correct. The choice of segments is updated regularly during transcription, so that the time budget is met even while the cost model is still inaccurate.

3.6 Transcribing

This figure shows an example of the transcription interface. Segments are displayed as boxes arranged vertically, next to a vertical waveform. Depending on the settings, there may be two types of segments: activated ones (white) and deactivated ones (gray). Activated segments are those that should be corrected or transcribed. For deactivated ones, the initial transcription is used, and no edits can be made. To convey context, deactivated segments are displayed along with their transcription. Usage is as follows:

- Deactivated segments can be played back via a simple click.

- Clicking an activated segment starts audio playback and allows editing its text. For better comprehension, the playback includes a bit of the audio from before and after the segment, at reduced volume. The segment to which the currently playing audio belongs is highlighted.

- After editing an active segment, a click on “Finish and check again” will play the segment, in order to encourage the user to double-check the correction.

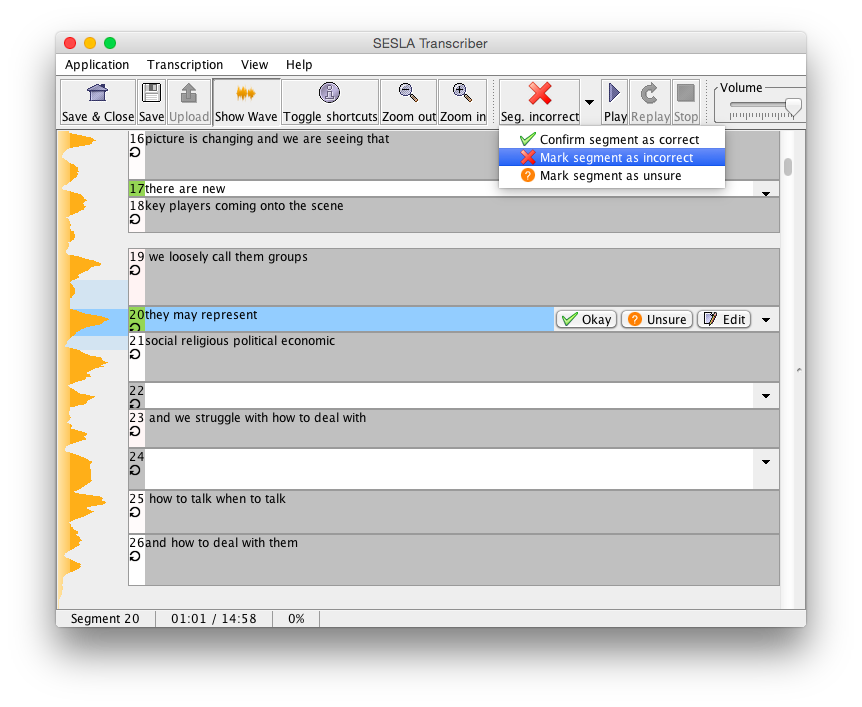

- As the figure shows, the user can then either go back to editing the transcription, or mark the segment as correct or unsure. The drop-down menu in the top bar also allows marking the segment as incorrect.

- Correct / unsure / incorrect segments are color-coded in green / orange / red. This allows the user to come back later and, for example, attempt to transcribe unsure segments again. If confidences are given, unedited segments are color-coded on a gradient between white and red, depending on their average word confidence.

- The segments’ audio can be repeated via the playback buttons at the top. There is also an auto-repeat feature, accessible via “Transcription” > “auto-repeat audio.” Activating this feature will play each segment’s audio in an infinite loop.

- To play back a longer stretch of speech, or parts of the audio that do not belong to any segment, click the waveform directly.

- The sidebar on the right shows keyboard shortcuts for faster operation.
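The white-to-red gradient for unedited segments can be sketched as a linear interpolation on the average word confidence. This Python example assumes that a confidence of 1.0 maps to white and 0.0 maps to pure red. The tool's exact mapping is not documented here, and the function name is hypothetical.

```python
def confidence_color(confidences: list) -> str:
    """Map a segment's average word confidence to a hex RGB color between
    white (#ffffff at confidence 1.0) and red (#ff0000 at confidence 0.0).
    Assumes a non-empty list of confidences."""
    avg = sum(confidences) / len(confidences)
    avg = min(max(avg, 0.0), 1.0)  # clamp to the valid confidence range
    # Red stays at full intensity; green and blue fade from 255 to 0 as the
    # average confidence drops, moving the color from white toward red.
    gb = round(255 * avg)
    return f"#ff{gb:02x}{gb:02x}"


if __name__ == "__main__":
    print(confidence_color([0.79, 0.99, 1.00]))
```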

3.6.1 Some notes on updates for budgeted transcription

- Whenever finishing the transcription of a segment, an outlier check is performed, and if no outlier is detected, the recorded times are added to the training material of the current user profile’s cost model.

- Outlier criteria are:

  - No audio playback or user activity for more than 5 seconds

  - Transcription time is shorter than the audio playback time

  - More than 30 seconds per word were needed for transcription

- After every 30 non-outlier segments, the cost model is retrained in the background.

- After the cost model is retrained, segments are chosen anew for the parts of the transcript that have not yet been edited. This happens as soon as the user starts entering the next segment, and reflects the updated cost model and the actual remaining time budget. The new segments should be ready before the user finishes the current segment; in rare cases, the user may need to wait a few seconds.

- For cost model training, only the most recent 700 training samples are used, in order not to let training time grow unboundedly.

- The trained cost model is saved to disk and loaded when starting a new task. The same cost model is used for all transcriptions of the same user. For optimal cost-modeling accuracy, it may be advisable to commit to either post-editing or from-scratch correction for each user profile.

- The remaining time budget continually decreases during transcription. However, longer periods of inactivity will not be subtracted from the time budget.
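The bookkeeping described in this list can be sketched in Python. The sketch has three parts: an outlier check using the three criteria above, a sample buffer that keeps only the 700 most recent samples, and a retraining trigger after every 30 accepted segments. All names are hypothetical. The idle criterion is interpreted as the longest gap without playback or user activity. The "cost model" here is a toy average of seconds per word, standing in for the actual model described in [sperber2014slt].

```python
from collections import deque

# Thresholds taken from the outlier criteria and update rules listed above.
MAX_IDLE_SECONDS = 5.0
MAX_SECONDS_PER_WORD = 30.0
RETRAIN_EVERY = 30
MAX_SAMPLES = 700


def is_outlier(transcription_s: float, playback_s: float,
               num_words: int, longest_idle_s: float) -> bool:
    """Return True if a finished segment should not be used for training."""
    return (
        longest_idle_s > MAX_IDLE_SECONDS      # idle for more than 5 s
        or transcription_s < playback_s        # shorter than the audio playback
        or transcription_s > MAX_SECONDS_PER_WORD * max(num_words, 1)  # > 30 s per word
    )


class CostModelTrainer:
    """Hypothetical per-profile trainer that collects non-outlier samples."""

    def __init__(self) -> None:
        # A deque with maxlen discards the oldest sample once 700 are stored.
        self.samples: deque = deque(maxlen=MAX_SAMPLES)
        self.accepted_since_retrain = 0
        self.seconds_per_word = None  # toy "model", set by retrain()

    def add_segment(self, transcription_s: float, playback_s: float,
                    num_words: int, longest_idle_s: float) -> bool:
        """Record a finished segment; return False if it was an outlier."""
        if is_outlier(transcription_s, playback_s, num_words, longest_idle_s):
            return False
        self.samples.append((num_words, transcription_s))
        self.accepted_since_retrain += 1
        if self.accepted_since_retrain >= RETRAIN_EVERY:
            self.accepted_since_retrain = 0
            self.retrain()  # the tool itself retrains in the background
        return True

    def retrain(self) -> None:
        # Toy stand-in for the real cost model: average seconds per word.
        words = sum(n for n, _ in self.samples)
        seconds = sum(t for _, t in self.samples)
        self.seconds_per_word = seconds / max(words, 1)
```

The fixed-length deque implements the "most recent 700 samples" rule without any explicit cleanup code.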

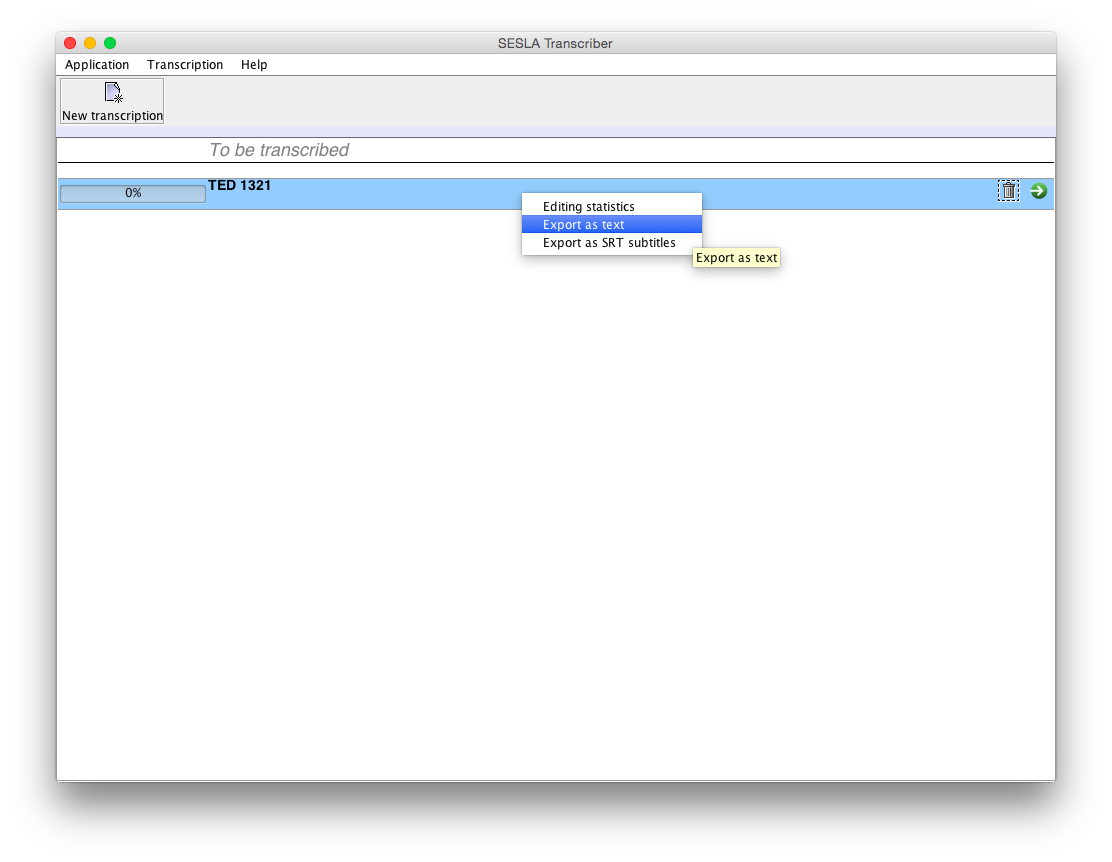

3.7 Exporting

After a transcription project is closed, its context menu in the project list allows exporting the transcript in plain text format or in .srt subtitle format.
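For reference, an .srt file consists of numbered blocks. Each block has a `start --> end` line with timestamps in HH:MM:SS,mmm format, followed by the subtitle text and a blank line. The Python sketch below produces that layout from (start, end, text) tuples. It illustrates the format only and is not the tool's exporter; the function names are hypothetical.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Build SRT text from an iterable of (start_s, end_s, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(blocks)  # blocks are separated by a blank line


if __name__ == "__main__":
    print(to_srt([(30.32, 31.59, "to go to the source or"),
                  (31.60, 32.64, "what they're all about")]))
```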

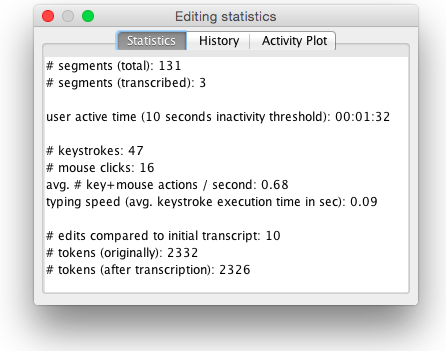

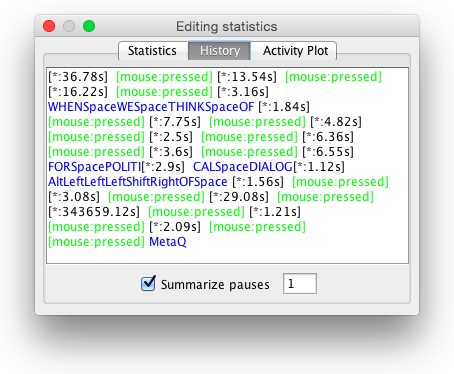

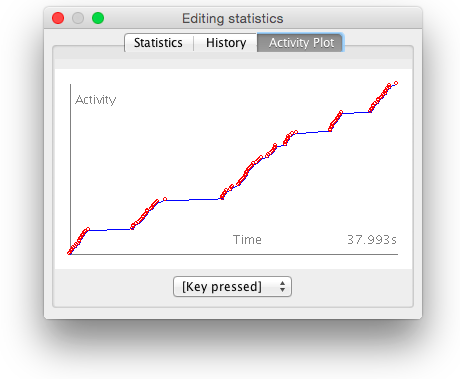

3.8 Transcription Statistics

The same context menu also allows access to a view that summarizes transcription / editing statistics, which may be useful for research purposes.

4 How it Works

Sesla Transcriber is based on the on-the-fly version of Sesla described in [sperber2014slt].

5 File Formats

5.1 .ctm

A standard file format for speech recognition output. Each line corresponds to a word and has the following fields: talk or segment ID, channel, start time in seconds, duration in seconds, label, and confidence score. Sesla Transcriber does not use the second field, which may be set to any value. Confidences may be omitted, but they are required for budgeted transcription. Segment breaks can be indicated either by inserting comment lines starting with #, or by changing the value of the first field (segment ID). If the string __INACTIVE_SEGMENT__ appears in a comment-line segment break, the following segment will be displayed but will not be editable. Filler words such as <laugh> and $(<breath>) are filtered out automatically, and pronunciation variants such as and(NC-0) are normalized to and. Here is an example:

```
# segment break
ted-1321 1 30.32 0.13 to 0.96
ted-1321 1 30.46 0.22 go 0.97
ted-1321 1 30.69 0.11 to(NC-0) 0.98
ted-1321 1 30.81 0.11 the 1.00
ted-1321 1 30.93 0.54 source 0.99
ted-1321 1 31.48 0.11 or 0.75
# segment break
ted-1321 1 31.60 0.19 what(NC-0) 0.99
ted-1321 1 31.80 0.17 they're(NC-0) 0.95
ted-1321 1 31.98 0.11 all 0.97
ted-1321 1 32.10 0.54 about 1.00
# segment break __INACTIVE_SEGMENT__
ted-1321 1 32.65 0.30 $(<breath>) 1.00
ted-1321 1 32.96 0.22 and(NC-0) 0.99
ted-1321 1 33.19 0.10 to(NC-0) 1.00
ted-1321 1 33.30 0.61 understand 0.99
ted-1321 1 33.92 0.06 the 0.97
ted-1321 1 33.99 0.25 key 0.93
ted-1321 1 34.25 0.53 players 0.90
ted-1321 1 34.79 0.04 $(<breath>) 1.00
ted-1321 1 34.84 0.17 and(NC-0) 0.98
# s.b.
ted-1321 1 35.02 0.10 to 1.00
ted-1321 1 35.13 0.22 deal 0.79
ted-1321 1 35.36 0.14 with 0.99
ted-1321 1 35.51 0.22 them 1.00
ted-1321 1 35.74 0.17 $(pause) 1.00
...
```

Here is an equivalent example, in which segment breaks are indicated by changes of the segment ID in the first field:

```
ted-1321-1 1 30.32 0.13 to 0.96
ted-1321-1 1 30.46 0.22 go 0.97
ted-1321-1 1 30.69 0.11 to(NC-0) 0.98
ted-1321-1 1 30.81 0.11 the 1.00
ted-1321-1 1 30.93 0.54 source 0.99
ted-1321-1 1 31.48 0.11 or 0.75
ted-1321-2 1 31.60 0.19 what(NC-0) 0.99
ted-1321-2 1 31.80 0.17 they're(NC-0) 0.95
ted-1321-2 1 31.98 0.11 all 0.97
ted-1321-2 1 32.10 0.54 about 1.00
# __INACTIVE_SEGMENT__
ted-1321-3 1 32.65 0.30 $(<breath>) 1.00
ted-1321-3 1 32.96 0.22 and(NC-0) 0.99
ted-1321-3 1 33.19 0.10 to(NC-0) 1.00
ted-1321-3 1 33.30 0.61 understand 0.99
ted-1321-3 1 33.92 0.06 the 0.97
ted-1321-3 1 33.99 0.25 key 0.93
ted-1321-3 1 34.25 0.53 players 0.90
ted-1321-3 1 34.79 0.04 $(<breath>) 1.00
ted-1321-3 1 34.84 0.17 and(NC-0) 0.98
ted-1321-4 1 35.02 0.10 to 1.00
ted-1321-4 1 35.13 0.22 deal 0.79
ted-1321-4 1 35.36 0.14 with 0.99
ted-1321-4 1 35.51 0.22 them 1.00
ted-1321-4 1 35.74 0.17 $(pause) 1.00
...
```
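The segmentation rules for .ctm files can be sketched as a small Python parser that yields the same segments for both examples above. This is a hypothetical illustration, not the tool's own parser. Two of its rules are inferred from the examples rather than taken from the tool's source: fillers are assumed to be tokens of the form $(...) or <...>, and pronunciation variants are assumed to be a parenthesized suffix such as (NC-0).

```python
import re
from dataclasses import dataclass, field

# Assumed filler pattern, e.g. $(<breath>), $(pause), <laugh>
FILLER = re.compile(r"^(\$\(.*\)|<.*>)$")
# Assumed pronunciation-variant suffix, e.g. and(NC-0) -> and
VARIANT = re.compile(r"\([^()]*\)$")


@dataclass
class CtmSegment:
    # Each word is (start_s, duration_s, word, confidence or None)
    words: list = field(default_factory=list)
    active: bool = True


def parse_ctm(lines):
    """Group .ctm word lines into segments.

    A segment break occurs at a comment line (#...) or when the first field
    (segment ID) changes; __INACTIVE_SEGMENT__ in a comment marks the next
    segment as non-editable. A break only opens a new segment once the next
    non-filler word arrives, so consecutive break signals never create
    empty segments.
    """
    segments = []
    break_pending, next_active, last_id = True, True, None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            break_pending = True
            if "__INACTIVE_SEGMENT__" in line:
                next_active = False
            continue
        fields = line.split()
        if len(fields) < 5:
            continue  # skip malformed lines, e.g. a literal "..."
        seg_id, label = fields[0], fields[4]
        start, duration = float(fields[2]), float(fields[3])
        confidence = float(fields[5]) if len(fields) > 5 else None
        if seg_id != last_id:
            break_pending, last_id = True, seg_id
        if FILLER.match(label):
            continue
        if break_pending:
            segments.append(CtmSegment(active=next_active))
            break_pending, next_active = False, True
        segments[-1].words.append((start, duration, VARIANT.sub("", label), confidence))
    return segments
```

Because the parser accepts any iterable of lines, it can read directly from an open file object.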

5.2 HTK .mlf label files

Label files in HTK’s .mlf style are supported. These are assumed to be text files with the following fields: start time (in units of 100 ns), end time (in units of 100 ns), word, optional confidence score, and optional segment-boundary marker. Segments can be declared by defining additional fields in a second level. Alternatives (the /// triple-slash notation) are not supported. As with .ctm files, inactive (non-editable) segments can be declared. The following example is equivalent to the one above:

```
303200000 304500000 to 0.96 segment-break
304600000 306800000 go 0.97
306900000 308000000 to(NC-0) 0.98
308100000 309200000 the 1.00
309300000 314700000 source 0.99
314800000 315900000 or 0.75
316000000 317900000 what(NC-0) 0.99 segment-break
318000000 319700000 they're(NC-0) 0.95
319800000 320900000 all 0.97
321000000 326400000 about 1.00
326500000 329500000 $(<breath>) 1.00 __INACTIVE_SEGMENT__
329600000 331800000 and(NC-0) 0.99
331900000 332900000 to(NC-0) 1.00
333000000 339100000 understand 0.99
339200000 339800000 the 0.97
339900000 342400000 key 0.93
342500000 347800000 players 0.90
347900000 348300000 $(<breath>) 1.00
348400000 350100000 and(NC-0) 0.98
350200000 351200000 to 1.00 S.B.
351300000 353500000 deal 0.79
353600000 355000000 with 0.99
355100000 357300000 them 1.00
357400000 359100000 $(pause) 1.00
...
```
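The following Python sketch parses one line of this .mlf layout and converts the 100 ns time units into seconds, as used in .ctm files. For example, 303200000 × 100 ns = 30.32 s. The sketch assumes the boundary rules suggested by the example: any token after the confidence marks a segment break (segment-break, S.B., ...), and the token __INACTIVE_SEGMENT__ also makes that segment non-editable. The function name is hypothetical.

```python
HTK_UNITS_PER_SECOND = 10_000_000  # HTK label times are in units of 100 ns


def parse_mlf_line(line: str):
    """Parse 'start end word [confidence] [boundary-marker]'.

    Returns (start_s, duration_s, word, confidence, is_break, is_inactive).
    """
    fields = line.split()
    start, end, word = int(fields[0]), int(fields[1]), fields[2]
    rest = fields[3:]
    confidence = None
    if rest:
        try:
            confidence = float(rest[0])  # optional confidence score
            rest = rest[1:]
        except ValueError:
            pass  # no confidence; the token is a boundary marker
    marker = " ".join(rest)
    return (
        start / HTK_UNITS_PER_SECOND,
        (end - start) / HTK_UNITS_PER_SECOND,
        word,
        confidence,
        bool(marker),                      # any trailing marker starts a segment
        "__INACTIVE_SEGMENT__" in marker,  # ...which may also be non-editable
    )
```

The first line of the example maps to the first line of the .ctm example: start 30.32 s, duration 0.13 s.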

5.3 .srt

Subtitle file format, as described here: http://en.wikipedia.org/wiki/SubRip

5.4 Supplementary text files

Plain text files. They can contain <strong>...</strong> tags; the enclosed text is highlighted.

5.5 User model data

User model training samples are stored under profiles/<username>/umExamples.txt. There is currently no GUI support for transferring a user’s cost model to a different profile. However, this is easy to do by copying this file to profiles/<new username>/umExamples.txt.
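The copy can also be scripted. This Python sketch assumes that it is run from the directory containing the profiles folder (or that `profiles_dir` points to that folder) and that Sesla Transcriber is closed while the file is copied. The function name is hypothetical.

```python
import shutil
from pathlib import Path


def copy_cost_model(old_user: str, new_user: str, profiles_dir: str = "profiles") -> Path:
    """Copy profiles/<old_user>/umExamples.txt to profiles/<new_user>/umExamples.txt."""
    src = Path(profiles_dir) / old_user / "umExamples.txt"
    dst_dir = Path(profiles_dir) / new_user
    dst_dir.mkdir(parents=True, exist_ok=True)  # create the new profile folder if needed
    dst = dst_dir / "umExamples.txt"
    shutil.copyfile(src, dst)  # raises FileNotFoundError if the source is missing
    return dst
```

For example, `copy_cost_model("alice", "bob")` would give the profile bob the training samples collected for alice.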